This was an exercise in recreating the library Micro Grad. Micro Grad is itself a small neural network library that mimics PyTorch. The goal was to understand more directly how neural networks are implemented in code, without having to calculate all the derivatives by hand (as I did in one of my previous projects).

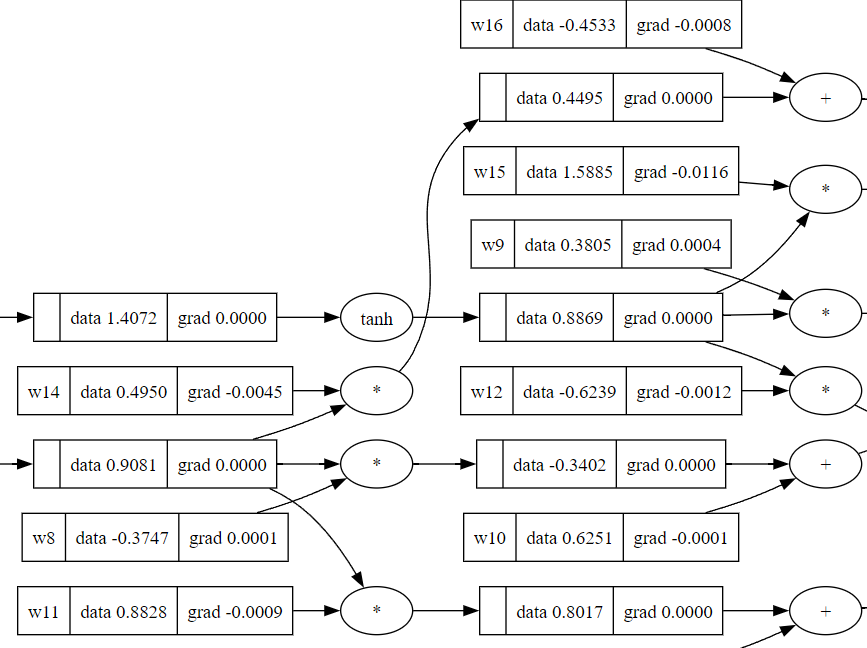

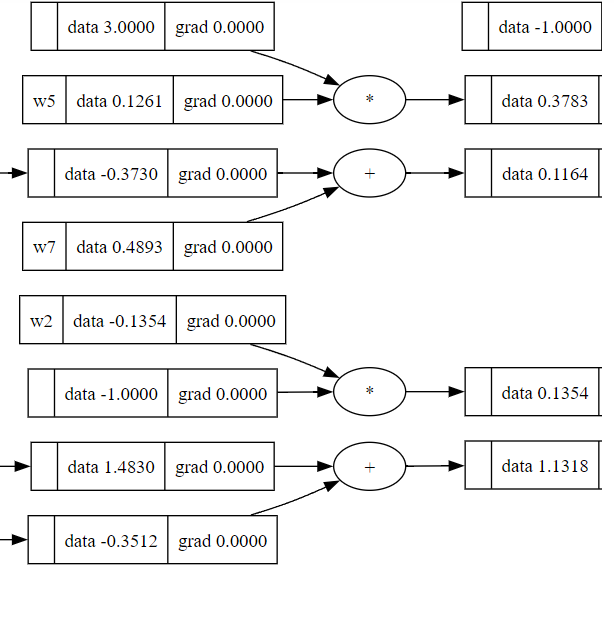

The library I made provides four building blocks: Value(), Neuron(), Layer(), and Network(). Network is the class you would mainly use to build a neural network. It includes a training method, and calling a Network instance performs a forward pass of the network.
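To give a feel for what a Value-style object does, here is a minimal sketch of a scalar that records how it was computed and then applies the chain rule backwards. It is not the code from the repo: the attribute names (`data`, `grad`, `_parents`, `_backward`) and the set of supported operations are assumptions made for illustration, loosely following the pattern this kind of library usually takes.

```python
import math


class Value:
    """Illustrative scalar that remembers its inputs so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # leaf nodes have nothing to propagate

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))

        def _backward():
            # d tanh(x)/dx = 1 - tanh(x)^2
            self.grad += (1.0 - t * t) * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Topologically sort the graph, then apply the chain rule output-first.
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()


# One tanh neuron with a single input: y = tanh(w * x + b)
x, w, b = Value(0.5), Value(-1.0), Value(0.25)
y = (w * x + b).tanh()
y.backward()
```

After `y.backward()`, `w.grad`, `x.grad`, and `b.grad` hold the derivatives of `y` with respect to each input, which is exactly the bookkeeping I previously had to do by hand.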

The Network class currently supports only MSE loss and only the tanh activation function, but the groundwork is in place to add more later. Feel free to clone the GitHub repo and try out the library for yourself. The API is as simple as calling Network("# of inputs", ["structure of network"]).
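As a rough illustration of that call shape, the sketch below builds a tanh network from a number of inputs and a list of layer sizes, runs a forward pass, and computes the MSE loss. `SketchNetwork` and `mse` are hypothetical names written for this example, and the sketch uses plain floats with no gradient tracking or training; the actual class in the repo may differ in its arguments and return values.

```python
import math
import random


class SketchNetwork:
    """Hypothetical stand-in for the Network class; forward pass only."""

    def __init__(self, n_inputs, layer_sizes, seed=0):
        rng = random.Random(seed)
        sizes = [n_inputs] + list(layer_sizes)
        # layers[k][j] is the (weights, bias) pair for neuron j of layer k
        self.layers = [
            [([rng.uniform(-1, 1) for _ in range(n_in)], rng.uniform(-1, 1))
             for _ in range(n_out)]
            for n_in, n_out in zip(sizes, sizes[1:])
        ]

    def __call__(self, xs):
        # Each layer applies tanh(w . x + b) per neuron and feeds the next layer
        for layer in self.layers:
            xs = [math.tanh(sum(w * x for w, x in zip(ws, xs)) + b)
                  for ws, b in layer]
        return xs


def mse(predictions, targets):
    """Mean squared error over paired predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# 3 inputs, two hidden layers of 4 neurons each, and 1 output neuron
net = SketchNetwork(3, [4, 4, 1])
inputs = [[2.0, 3.0, -1.0], [3.0, -1.0, 0.5]]
targets = [1.0, -1.0]
predictions = [net(x)[0] for x in inputs]
loss = mse(predictions, targets)
```

Because every neuron ends in tanh, each prediction stays strictly between -1 and 1, which is why targets of 1.0 and -1.0 are a natural fit for this setup.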

You can find the code at the GitHub repo here: https://github.com/c-crowder/grad_homemade

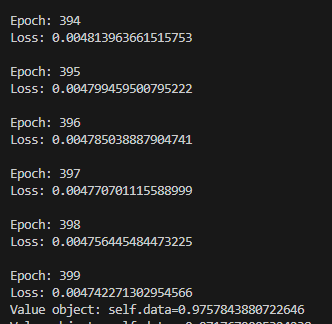

Training Loop

Some Weights Before Training

Some Weights After Training